有朋友问我一个Fortran程序的问题,Fortran语言以前曾学过,只是时间上太过久远,所有的语法全还给老师了。看着类似天书的代码,不禁想在单机系统上已经有如Matlab这样的如此强大的数值计算软件为什么还要费力用Fortran来实现呢?!在多机或集群环境上可能会不一样,只是我对并行计算所知甚少。

集群环境上MPI使用相当多,也只是了解一些,从未实际使用过,正好趁现在有些兴趣便做了做实验。

在网上看到有个计算Pi的程序 <http://chpc.wustl.edu/mpi-fortran.html>,用Fortran写的,其实就是计算定积分:

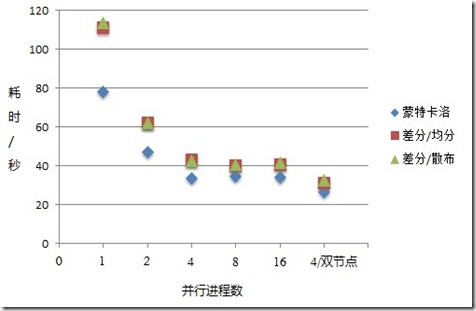

文中采用的是蒙特卡洛方法,我重写了一个C语言的实现,程序MPI_mc_pi.c,并在VMware环境下做了做实验,使用了2E9个采样点,分别做了单机不同并行进程数及双结点的对比,随并行度的增加,所需时间基本上是线性减少,由于测试机是双核四线程的Intel i5 CPU,当并行度超过4时便没有什么意义了,纯粹是为测试着玩。

后面又用差分的办法重新做了同样的计算,将[0, 1)分成2E9个网格,每个进程平分空间[0, 1),如进程1为[0, 1/nprocs),进程0处理最后剩下的部分[1-(nprocs-1)/nprocs, 1),见程序MPI_dc_pi.c,计算结果基本遵循线性减少规律,但是时间上却要比蒙特卡洛方法高出很多,特别是单进程的情况下,效率差了近50%。

于是又改进了差分程序,将空间平均分布,改成各进程散布在[0,1)空间上,即每个进程在网格采样上心nprocs为步进,参见程序MPI_dc_pi2.c,如此改进后,结果和均分采样空间基本差不多,没有显著变化。

后面又用双机做了Beowulf Cluster测试(VMware + X61),每个节点限制为2个进程,因为另一台机器是双核的CPU(X61),发现双节点情况下性能明显好于单节点。

将结果汇总后做成图表如下:

如图所示,两种差分方式基本一致,这点完全可以理解,但是蒙特卡洛方法为什么能快这么多,确实让人费解,难道是drand48()的效率比double型除法还快?想起来总觉不太可能,那就让数据说话吧:

分别对drand48()和double型除法做了测试,结果正如预料,drand48()的效率更差,分别执行1E10次的时间为:

drand48(): 4m21.065s double型除法: 3m27.399s

排除了这个原因之后,剩下的可能只能在drand48()里面了,drand48()虽是高精度的48位的伪随机数产生器,其结果应该还是不够平均和随机。评估drand48()的事咱不干,所以就做了个简单实验,只要能验证计算时间和drand48()生成的随机数不平均度显著相关即可。验证程序还是采用蒙特卡洛方法,只是将随机数的产生范围限定在[0.5,1]之内,不是之前的[0, 1],结果单进程计算时间为1m59.160s,双进程为1m35.185s,这足以说明随机数的随机度对计算时间有较大影响(Pi的值对与不对没关系,此时只关注计算过程所花的时间)。

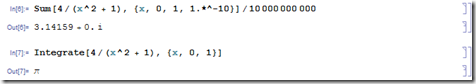

最后在Mathematica上也做了个测试,出人意料的是Mathematica只用几了秒钟就算出了1E10网格的差分,即便是定积分计算,也是完完全全的秒杀,太牛x了,不服不行!

本文相关代码:

mpiuser@Ubunut-X64:~/MPI$ cat MPI_mc_pi.c #include <stdio.h> #include <stdlib.h> #include <time.h> #include <mpi.h> int main(int argc, char** argv) { int myrank, nprocs, rc; unsigned long t, times, avg_times; double l_sum = 0, g_sum = 0, x = 1.0; times = 2000000000; MPI_Init(&argc, &argv); MPI_Comm_size(MPI_COMM_WORLD, &nprocs); MPI_Comm_rank(MPI_COMM_WORLD, &myrank); avg_times = times / nprocs; if (0 == myrank) { avg_times = times - avg_times * (nprocs - 1); } /* printf("%d: points: %lu\n", myrank, avg_times); */ srand48((myrank + 1) * (time(NULL) & 0xFFF)); for (t = 0; t < avg_times; t++) { x = drand48(); l_sum = l_sum + 4.0/(x * x + 1.0); } rc = MPI_Reduce(&l_sum, &g_sum, 1, MPI_DOUBLE_PRECISION, MPI_SUM, 0, MPI_COMM_WORLD); if (0 != rc) { printf("MPI_Reduce failed with error code: %d\n", rc); } else if (0 == myrank) { printf("PI result: %f/%lu = %f\n", g_sum, times, g_sum / times); } MPI_Finalize(); return 0; } mpiuser@Ubunut-X64:~/MPI$ cat MPI_dc_pi.c #include <stdio.h> #include <stdlib.h> #include <time.h> #include <mpi.h> int main(int argc, char** argv) { int myrank, nprocs, rc; unsigned long t, grids, avg_grids, start, end; double l_sum = 0, g_sum = 0, x = 1.0; grids = 2000000000; MPI_Init(&argc, &argv); MPI_Comm_size(MPI_COMM_WORLD, &nprocs); MPI_Comm_rank(MPI_COMM_WORLD, &myrank); avg_grids = grids / nprocs; if (0 == myrank) { start = avg_grids * (nprocs - 1); end = grids; } else { start = avg_grids * (myrank - 1); end = start + avg_grids; } /* printf("%d: [%lu - %lu)\n", myrank, start, end); */ for (t = start; t < end; t++) { x = 1.0 * t / grids; l_sum = l_sum + 4.0/(x * x + 1.0); } rc = MPI_Reduce(&l_sum, &g_sum, 1, MPI_DOUBLE_PRECISION, MPI_SUM, 0, MPI_COMM_WORLD); if (0 != rc) { printf("MPI_Reduce failed with error code: %d\n", rc); } else if (0 == myrank) { printf("PI result: %f with %lu grids.\n", g_sum/grids, grids); } MPI_Finalize(); return 0; } mpiuser@Ubunut-X64:~/MPI$ cat MPI_dc_pi2.c #include <stdio.h> #include <stdlib.h> #include <time.h> #include <mpi.h> int main(int argc, char** argv) { int myrank, nprocs, rc; unsigned long t, grids; double l_sum = 0, g_sum = 0, x = 1.0; grids = 2000000000; MPI_Init(&argc, &argv); MPI_Comm_size(MPI_COMM_WORLD, &nprocs); MPI_Comm_rank(MPI_COMM_WORLD, &myrank); for (t = myrank; t < grids; t += nprocs) { x = 1.0 * t / grids; l_sum = l_sum + 4.0/(x * x + 1.0); } rc = MPI_Reduce(&l_sum, &g_sum, 1, MPI_DOUBLE_PRECISION, MPI_SUM, 0, MPI_COMM_WORLD); if (0 != rc) { printf("MPI_Reduce failed with error code: %d\n", rc); } else if (0 == myrank) { printf("PI result: %f with %lu grids.\n", g_sum/grids, grids); } MPI_Finalize(); return 0; } 编译命令: mpiuser@Ubunut-X64:~/MPI$ cat build mpicc MPI_mc_pi.c -std=c99 -W -Wall -pedantic -D_SVID_SOURCE -g -o MPI_mc_pi mpicc MPI_dc_pi.c -std=c99 -W -Wall -pedantic -g -o MPI_dc_pi mpicc MPI_dc_pi2.c -std=c99 -W -Wall -pedantic -g -o MPI_dc_pi2

This is a message to the website creator. I discovered your page via Bing but it was hard to find as you were not on the front page of search results. I know you could have more traffic to your site. I have found a company which offers to dramatically improve your rankings and traffic to your site: http://anders.ga/-fn4j I managed to get close to 1000 visitors/day using their service, you can also get many more targeted traffic from Google than you have now. Their service brought significantly more traffic to my site. I hope this helps!